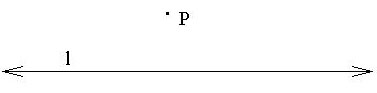

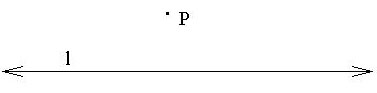

Consider a point p and a line l in some plane, with p not on l:

How many lines are there in the plane that pass through point p and that are parallel to line l?

It seems clear, from what we mean by “point”, “line”, and “plane”, that there is just one such line. This assertion is logically equivalent to Euclid's 5th, or parallel, postulate (in the context of his other postulates).

In fact, this seemed so obvious to everyone, mathematicians included, that for two thousand years mathematicians attempted to prove it. After all, since it “is true”, it should be provable. One approach, taken independently by Carl F. Gauss, János Bolyai, and Nikolai Lobachevsky, was to assume the negation of the “obvious truth” and attempt to arrive at a logical contradiction. But they did not arrive at a contradiction. Instead, the logical consequences went on and on. They included “theorems”, all provable from the assumptions. In fact, they discovered what Bolyai called a “strange new universe”, and what we today call non-Euclidean geometry.

The strange thing is that the real, physical universe turns out to be non-Euclidean. Einstein's special theory of relativity uses a geometry developed by H. Minkowski, and his general theory, a geometry of Gauss and G. F. B. Riemann.[1]

Mathematics is about an imaginative universe, a world of ideas, but the imagination is constrained by logic. The basic idea behind proof in mathematics is that everything is exactly what its definition says it is. A proof that something has a property is a demonstration that the property follows logically from the definition alone. On the intuitive level, definitions serve to lead our imagination. In a formal proof, however, we are not allowed to use attributes of our imaginative ideas that don't follow by logic alone from definitions and from axioms relating undefined terms. This view of proof, articulated by David Hilbert, is accepted today by the mathematical community, and is the basis for research mathematics and for graduate and upper-level undergraduate mathematics courses.
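As a small illustration of a property following from a definition alone (an example chosen here for illustration, not one taken from the text), suppose an integer n is defined to be even when n = 2k for some integer k. A proof that the sum of two even integers is even might then be outlined as follows, using only that definition and ordinary arithmetic:

```latex
\begin{align*}
&\text{Assume } a \text{ and } b \text{ are even.}\\
&\text{By definition, } a = 2j \text{ and } b = 2k \text{ for some integers } j \text{ and } k.\\
&\text{Then } a + b = 2j + 2k = 2(j + k).\\
&\text{Since } j + k \text{ is an integer, } a + b \text{ is even, by definition.}
\end{align*}
```

Each step either unpacks the definition or repacks it. No mental picture of “evenness” is consulted along the way.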

There is also a very satisfying aspect of “proof” that comes from our ability to picture situations and to draw inferences from the pictures. In calculus, many theorems, but not all, have satisfactory picture proofs. Picture proofs are satisfying because they enable us to see [2] the truth of the theorem. Rigorous proof in the sense of Hilbert has an advantage not shared by picture proofs: proof outlines are suggested by the very language in which theorems are expressed. Thus both picture proofs and rigorous proofs have advantages. Calculus is best seen using both types of proof.

A picture is an example of a situation covered by a theorem. The theorem, of course, is not true about the actual picture, the molecules of ink stain on the molecules of paper. It is true about the idealization that we intuit from the picture. When we see that the proof of a theorem follows from a picture, we see that the picture is in some sense completely general: we can't draw another picture for which the theorem is false. To those mathematicians who are satisfied only by rigorous mathematics, such a situation would merely represent proof by lack of imagination: we can't imagine any situation essentially different from the situation represented by the picture, and we conclude that, because we can't imagine it, it doesn't exist. Non-Euclidean geometry, on which Einstein's theory of relativity is based, is an example of a situation where possibilities not imagined are, nevertheless, not logically excluded.

Gauss [3] kept his investigations in this area secret for years, because he wanted to avoid controversy, and because he thought that “the Boeotians” [4] would not understand. It is surely a profound thing that the universe, while it may not be picturable to us, is nevertheless logical, and that following the logical but unpicturable has unlocked deep truths about the universe. In mathematics, unpicturable but logical results are sometimes called counterintuitive.

Rigorous and picture proofs are both necessary to a good course in calculus, and both are within grasp [5]. To insist on a rigorous proof where a picture has made everything transparent is deadening. Mathematicians with refined intuition know that they could, if pressed, supply such a proof, and it therefore becomes unnecessary to actually do it. We therefore focus the method of outlining proofs on those theorems for which there is no satisfactory picture proof.

Our purpose is not to cover all theorems of calculus, but to do enough to enable a student to “catch on” to the method [6]. A detailed formal exposition of the method can be found in Introduction to Proof in Abstract Mathematics and in Deductive Mathematics—An Introduction to Proof and Discovery, Second Edition.

In calculus texts, “examples” are given that illustrate computational techniques, the use of certain ideas, or the solution of certain problems. In this text supplement, we give “examples” that illustrate the basic features of the method of discovering a proof outline.

FOOTNOTES

1. See Marvin Greenberg, Euclidean and Non-Euclidean Geometries, W. H. Freeman, San Francisco, 1980.

2. The word “theorem” literally means “object of a vision”.

3. One logical consequence of there being more than one line through p parallel to l is that the sum of the angles of a triangle is less than 180 degrees. Gauss made measurements of the angles formed by three prominent points, but his measurements were inconclusive. We know today that the difference between the Euclidean 180 degrees and the sum predicted for his triangle by relativity would be too small to be picked up by his instruments.

4. A term of derision. Today's “Boeotians” have derisive terms of their own, claiming that courses that depend on rigorous proof have “rigor-mortis”.

5. The fundamentals of discovering proof outlines can be picked up in a relatively short time, compared to the years of study of descriptive mathematics prerequisite to calculus.

6. In the American Math. Monthly 102, May 1995, page 401, Charles Wells states: “A colleague of mine in computer science who majored in mathematics as an undergraduate has described how as a student he suddenly caught on that he could do at least B work in most math courses by merely rewriting the definitions of the terms involved in the questions and making a few obvious deductions.” The reason this worked for Prof. Wells's colleague is that definitions are basic for deductive mathematics, and upper-level math courses are taught from a deductive perspective. Our method for discovering proof steps is merely a systematic way of making the deductions, a way that can be taught.